A Hard Disk Drive (HDD) is generally used as a secondary storage medium, and its mechanical nature adds latency to data storage and retrieval. To reduce this latency, a component called a cache, which is usually smaller but resides on a faster storage medium, stores data so that subsequent requests can be served faster. Solid State Drives (SSDs), which are non-volatile flash memory, have much lower latency than HDDs but cost more. The idea presented here is to use one or more SSDs to form a cache that is relatively fast and can also hold gigabytes of hot data. A similar solution is implemented, along with other enhancements, in StorTrends. Readers with an interest in and knowledge of storage concepts such as HDD, SSD, RAID, volumes, IO patterns, logical drives, containers, JBODs, and cache may want to know more about such a solution.

Currently, a cache is usually implemented by reserving a portion of the system memory (RAM) to hold reads and writes. At some point this cache becomes full, leaving no space for a new IO request; an earlier entry must then be evicted (and flushed back to the HDD if it holds unwritten data) so that the new request can be stored. The entry chosen for replacement can be, for example, the Least Recently Used (LRU) or the Least Frequently Used (LFU) one. With terabytes of underlying storage, this small portion of memory can fill up in seconds. Another form of cache, called a disk cache, is embedded in the HDD itself. However, such caches are limited in size, typically only a few KB or MB. Another problem is that a cache implemented in RAM is volatile, i.e. the data in memory is lost during a power failure. It needs an additional component, such as a UPS, to guard against data loss across power failures. Hence, the two major problems with current cache implementations are:

- Limited cache capacity

- Volatile nature
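The eviction behaviour described above can be illustrated with a minimal sketch. It assumes an LRU policy with write-back flushing; the class name `LRUWriteBackCache` and the use of a plain dictionary as a stand-in for the HDD are hypothetical choices made for illustration, not part of any StorTrends interface.

```python
from collections import OrderedDict


class LRUWriteBackCache:
    """Toy LRU cache in front of a slower backing store.

    `backing_store` is a dict standing in for the HDD (an assumption
    made for this sketch).
    """

    def __init__(self, capacity, backing_store):
        self.capacity = capacity
        self.backing = backing_store
        # Maps block number -> (data, dirty flag); order tracks recency.
        self.entries = OrderedDict()

    def read(self, block):
        if block in self.entries:
            # Cache hit: mark the block as most recently used.
            self.entries.move_to_end(block)
            return self.entries[block][0]
        # Cache miss: fetch from the slow store and cache a clean copy.
        data = self.backing[block]
        self._insert(block, data, dirty=False)
        return data

    def write(self, block, data):
        # Writes are staged in the cache and flushed only on eviction.
        self._insert(block, data, dirty=True)

    def _insert(self, block, data, dirty):
        if block in self.entries:
            # Once dirty, a block stays dirty until it is flushed.
            dirty = dirty or self.entries[block][1]
            self.entries.move_to_end(block)
        self.entries[block] = (data, dirty)
        while len(self.entries) > self.capacity:
            # Evict the least recently used entry.
            old_block, (old_data, old_dirty) = self.entries.popitem(last=False)
            if old_dirty:
                # Only entries with unwritten data need to go back to disk.
                self.backing[old_block] = old_data
```

With a capacity of only a few entries, almost every new request forces an eviction, which is the capacity problem listed above. Everything held in `entries` would also be lost on a power failure, which is the volatility problem.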

These problems can be addressed by using SSDs as cache. With SSDs, a cache size on the order of gigabytes can be achieved easily, and the data stored on these drives is non-volatile. Using more than one SSD for caching not only improves performance but also provides higher cache capacity. In addition to solving the above-mentioned problems, SSDs have further advantages over HDDs, such as:

- No moving components, and thus

  - Less noise

  - Less heat emitted

  - Lower power consumption

  - Less susceptibility to shock

- Much lower seek time and latency

- Smaller size and lower weight

Nonetheless, SSDs have the following disadvantages:

- The cost of SSDs is much higher than that of traditional HDDs

- The maximum capacity of commercially available SSDs is still much smaller than that of HDDs

- The number of write cycles an SSD can sustain is limited, which limits its endurance and can reduce reliability under write-heavy workloads

Thus, a combination of HDDs and SSDs is required to balance the end user's trade-off between capacity and performance. Using SSDs to cache the data stored on HDDs offers a better cache implementation than traditional caching technologies, making storage arrays perform better while keeping costs down. Taking these points into consideration, StorTrends includes an SSD caching implementation that boosts performance by providing both read and write caching, along with additional benefits such as hot plug and endurance monitoring.

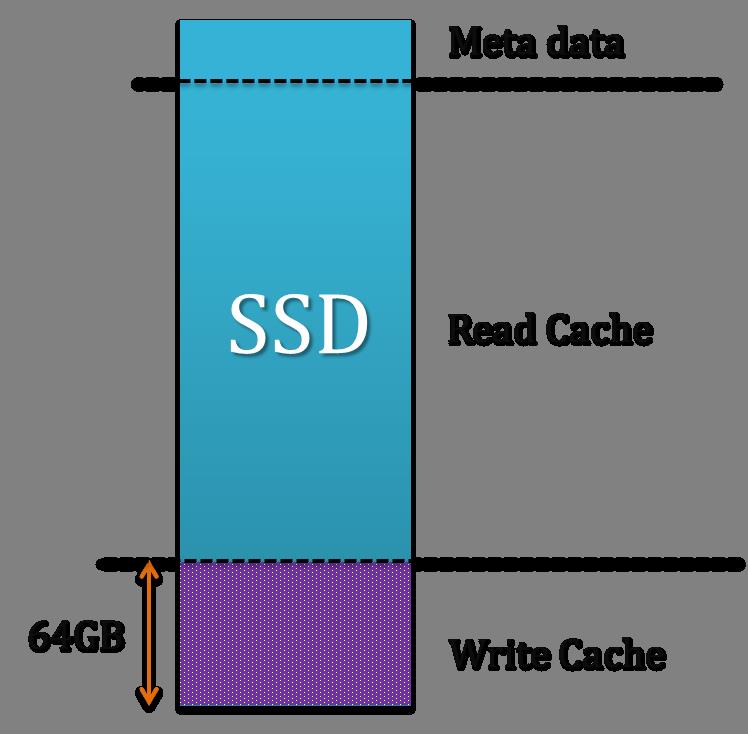

In its current implementation, StorTrends supports at least two SSDs for use as cache. Each SSD is logically split into the following areas:

- The metadata area contains mapping information that maps blocks on the SSD to the corresponding blocks on the HDDs whose data is being cached

- The read cache is a striped caching area used to minimize read latencies

- The write cache minimizes write latencies by staging writes temporarily before flushing them to the HDDs, so application writes complete faster. The write cache is mirrored across both drives to protect against the failure of either drive.

The SSD cache split is shown in the accompanying diagram.
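The three areas can be sketched as a toy in-memory model. This is an illustration under stated assumptions, not the StorTrends on-disk format: the class `TwoSsdCache`, the modulo-based stripe placement, the slot numbering, and the `failed` parameter are all hypothetical.

```python
class TwoSsdCache:
    """Toy model of two SSDs, each split into meta, read, and write areas."""

    def __init__(self):
        self.ssds = [{"meta": {}, "read": {}, "write": {}} for _ in range(2)]

    def cache_read(self, hdd_block, data):
        # Striping (assumed placement rule): each cached read block lives
        # on exactly one SSD, chosen here by block number modulo 2.
        ssd = self.ssds[hdd_block % 2]
        slot = len(ssd["read"])
        ssd["read"][slot] = data
        ssd["meta"][hdd_block] = ("read", slot)

    def stage_write(self, hdd_block, data):
        # Mirroring: every staged write is stored on both SSDs.
        for ssd in self.ssds:
            entry = ssd["meta"].get(hdd_block)
            reuse = entry is not None and entry[0] == "write"
            slot = entry[1] if reuse else len(ssd["write"])
            ssd["write"][slot] = data
            # The metadata now points at the newest copy of the block.
            ssd["meta"][hdd_block] = ("write", slot)

    def lookup(self, hdd_block, failed=None):
        # Use the metadata map to find the cached copy, skipping a failed SSD.
        for idx, ssd in enumerate(self.ssds):
            if idx == failed:
                continue
            entry = ssd["meta"].get(hdd_block)
            if entry is not None:
                area, slot = entry
                return ssd[area][slot]
        return None  # Not cached on any surviving SSD: go to the HDD.

    def flush(self, hdd):
        # Destage the mirrored writes to the HDD (a dict in this sketch).
        primary = self.ssds[0]
        for hdd_block, (area, slot) in primary["meta"].items():
            if area == "write":
                hdd[hdd_block] = primary["write"][slot]
        for ssd in self.ssds:
            ssd["meta"] = {b: e for b, e in ssd["meta"].items() if e[0] != "write"}
            ssd["write"].clear()
```

The asymmetry is deliberate. Losing one SSD loses only half of the striped read cache, which can be repopulated from the HDDs, whereas staged writes may not yet exist anywhere else and therefore need the mirror.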

The SSD cache remains operational even if one of the drives fails. Because a single drive failure is tolerated, the SSD cache operates in one of the following two states, depending on the failure and re-addition of drives:

- Optimal - when both drives are present and fully functional

- Degraded - when one of the drives has failed or is being rebuilt.
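The two states can be expressed as a small function of per-drive status. The status strings `"ok"`, `"failed"`, and `"rebuilding"` and the function name `cache_state` are assumptions made for this sketch. The text does not describe what happens when both drives are unavailable, so that case returns `None` here rather than guessing.

```python
from enum import Enum


class CacheState(Enum):
    OPTIMAL = "optimal"
    DEGRADED = "degraded"


def cache_state(drive_status):
    """Derive the cache state from the status of the two SSDs.

    `drive_status` is a list of two strings, each "ok", "failed",
    or "rebuilding" (hypothetical labels for this sketch).
    """
    if len(drive_status) != 2:
        raise ValueError("this sketch models exactly two SSDs")
    unhealthy = [s for s in drive_status if s != "ok"]
    if not unhealthy:
        return CacheState.OPTIMAL
    if len(unhealthy) == 1:
        # One drive failed or still rebuilding: the cache keeps working.
        return CacheState.DEGRADED
    # Both drives unavailable: not covered by the two states in the text.
    return None
```

For example, `cache_state(["ok", "rebuilding"])` yields `CacheState.DEGRADED`, and the state returns to `CacheState.OPTIMAL` once the rebuilt drive reports `"ok"`.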

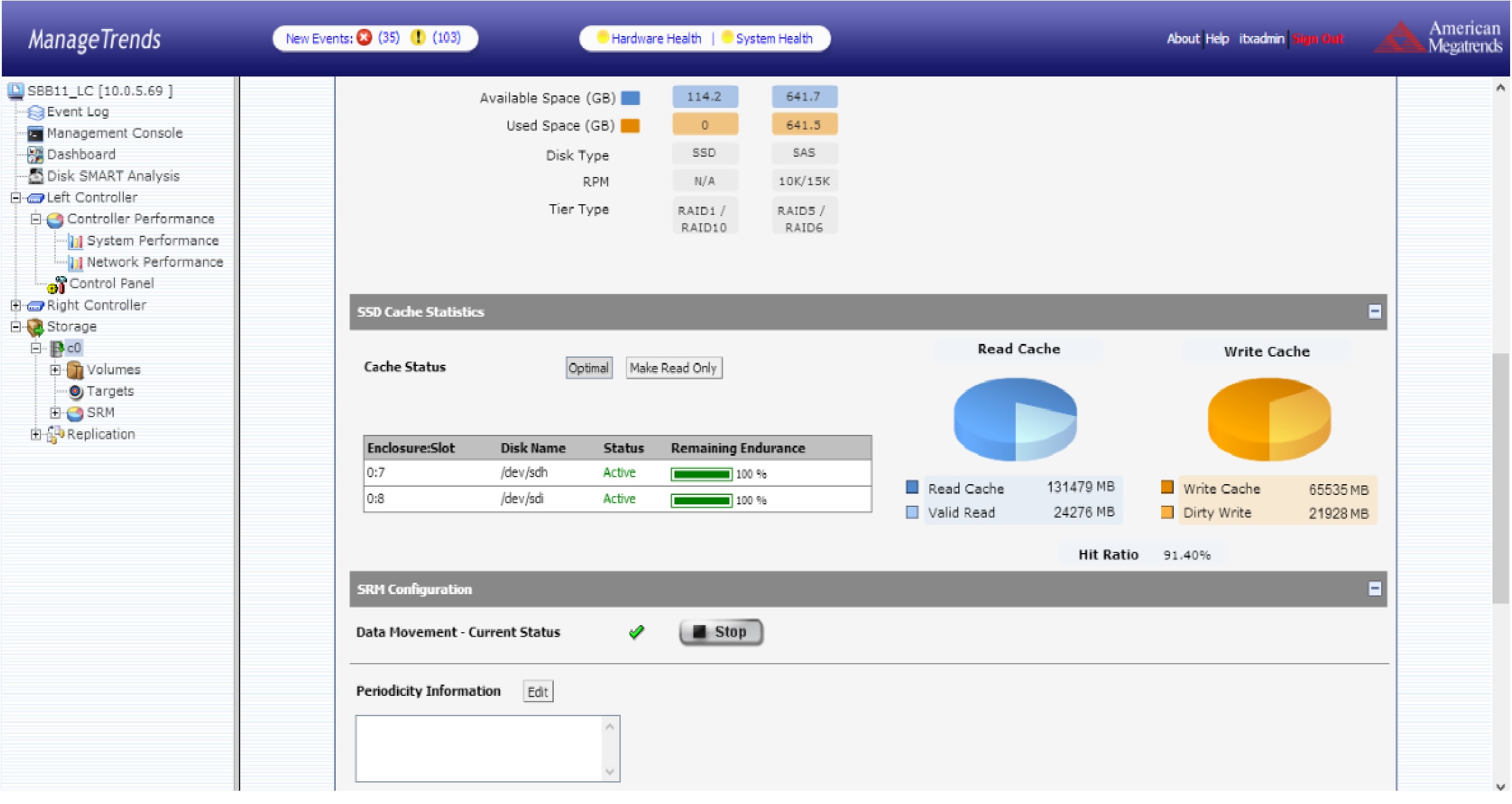

In addition, the StorTrends implementation of SSD cache provides features for better management and monitoring of the cache. The currently available features are:

- Ability to hot unplug and hot-plug drives in SSD cache

- Volume-specific IO acceleration, where only the data of specific application LUNs is cached

- Endurance monitor to track the endurance of the SSD drives and alert if any of them is likely to fail
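As a rough illustration of what an endurance monitor might check, the sketch below compares the write cycles each drive has consumed against its rated limit and reports drives that cross an alert threshold. The input format, the 90% threshold, and the function name `endurance_alerts` are all assumptions; the actual StorTrends metrics and alerting rules are not described here.

```python
def endurance_alerts(drives, threshold=0.9):
    """Return (drive name, worn fraction) for drives at or past the threshold.

    `drives` maps a drive name to (cycles_used, cycles_rated); both the
    format and the default threshold are hypothetical.
    """
    alerts = []
    for name, (cycles_used, cycles_rated) in drives.items():
        worn = cycles_used / cycles_rated
        if worn >= threshold:
            alerts.append((name, round(worn, 2)))
    return alerts


# Example with made-up numbers: only ssd0 is close to its rated limit.
alerts = endurance_alerts({"ssd0": (2850, 3000), "ssd1": (1200, 3000)})
```

An alert raised ahead of failure pairs naturally with the hot-plug feature, since a worn drive can then be replaced while the cache continues to run in the degraded state.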